In 2021, I collaborated with two of my co-workers and submitted an essay to the U.S. Naval Institute’s Coast Guard Essay Contest. Admittedly, I am not a great collaborator. I had trouble relinquishing control over exactly where our essay should go.

As if to teach me a lesson, the essay we entered in 2021 was awarded second prize.[1]

More importantly, that validation sparked some personal growth: I began formally writing down my ideas.

I believe it is important to take the time to write down your thoughts and ideas. As you detail your thought process and defend your ideas through pen and paper, I believe one of two things occurs:

- you build support for your ideas, or

- you discover that your ideas are not as well supported as you may have believed.

Ideally, in the second scenario, you begin to change your ideas, or at least find that you no longer want to speak to them with as much zeal and conviction.

In 2022, I entered the same essay contest a second time. I did not win, but I am still proud of my thoughts and how I put them on paper. This is what I entered:

Who is the Commandant’s Data Scientist?

Who is the Commandant’s data scientist? Asking this question may seem unproductive. If you are a data scientist, and you are in the Coast Guard, are you not the Commandant’s data scientist? The truth is, the Coast Guard has been working around fully incorporating data scientists into decision-making since Big Data rose to prominence. This statement is not intended as a condemnation of the Coast Guard. In a hierarchical organization where decision-making is naturally deferred up the chain of command, there are simply no data scientists senior enough to influence decisions. And even when a junior data scientist is given a seat at the table, that junior member must effectively punch up to ensure their voice is both heard and understood. But rather than asking junior data scientists to rise to the occasion, the Coast Guard turns to contractors for analysis. These contractors often lack the operational experience of an internal Coast Guard data scientist and require additional coaching to understand and frame the Coast Guard’s problems. This scenario results in three problems: continued procurement of flawed tools and analysis, segregation of data scientists from decision-making, and skill decay amongst the Coast Guard’s internal data science community.

Analysis and Tool Procurement

Across both the Coast Guard and industry, there are endless references to “analysis.” In the acquisition life cycle alone, there is an Alternative Analysis (AA), an Analysis of Alternatives (AoA), a Capability Analysis Report (CAR), and more. The Coast Guard Operational Requirements Generation Manual (COMDTINST M5000.4) defines a CAR as, “An assessment of the Coast Guard’s ability to fulfill a mission, objective, or function.”

According to Merriam-Webster, an assessment is “the action or an instance of making a judgment about something.”[2]

Also according to Merriam-Webster, an analysis is:

“A detailed examination of anything complex in order to understand its nature or to determine its essential features: a thorough study.”[3]

And a CAR is a Capability Analysis Report.

This may seem like a trivial difference; assessment or analysis, the acronym is CAR either way. Nevertheless, as the fields of data and analysis grow, the distinction between an assessment and an analysis becomes harder to ignore. Somehow, an assessment is often pitched as an analysis, and seldom the other way around. Repeatedly upselling an assessment as an analysis has an indirect cost, and that cost is always the devaluation of analysis.

An immediate effect of devaluing analysis is data scientist churn. Without internal data science talent, an organization is forced to outsource its analysis. But this creates a paradox: if there are no data scientists to perform an analysis, then there are also no data scientists to receive and assess one (moving forward, we will call this the “data scientist/analysis paradox”). Without an objective assessment of the mathematics behind an analysis’s conclusions, an organization is susceptible to confirmation bias. That is, the organization will adjudicate along the lines of: this analysis is good because it affirms our hypothesis, or this analysis is bad because it contradicts our hypothesis. With this toss-up as the likely result of assessing analysis, it should be no surprise to hear distaste or dissatisfaction with analysis.

Dissatisfaction with analysis can also be attributed to a lack of analytic problem framing. Problem framing is one of the most important skills for a data scientist. Translating a real-world problem into a feasible formulation, and then interpreting a predictive or prescriptive solution in layman’s terms, takes both a strong technical grasp of concepts (education) and familiarity and experience within the organization (business acumen). This is why internal analytic talent is extremely valuable.

So, without data scientists, analysis is outsourced. And because data scientists are scarce, that outsourcing is seldom led by a data scientist. Frequently, this results in poor problem framing, which contributes to overall dissatisfaction with analysis. Once identified, this sequence of events should be concerning. But it is equally concerning when data scientists are left out of both custom and generic software tool procurements. Without problem framing for a software tool, the procurement ends in a flawed tool. Flawed tools lead to frustration and additional tool procurements. So, in addition to the data scientist/analysis paradox, organizations can fall into a cycle of continual software procurements with equally dissatisfying results.

Segregation of Data Scientists from Decision-Making

The data scientist/analysis paradox is an easy trap for an organization to fall into. Standard practice is rapidly moving toward incorporating some form of analysis into decision-making. But learning and then practicing analysis takes time. And the education system in the United States is not producing a workforce with a strong foundation in the mathematical concepts required for higher-level analysis and machine learning.

Very few people would argue against the relevance of analysis. However, upselling assessments and various other products as analysis sends data scientists a different message. That message drives data scientist churn from the organization and leaves a dearth of analytic talent at senior levels. Then the natural hierarchical structure of the organization immediately segregates the data scientists who remain from decisions.

Skill Decay Amongst Data Scientists

Math is hard. Personally, I am passionate about math. But if I do not practice calculus, I do not remember calculus. This example should make sense to the many people who have undergraduate calculus courses in their past and would cringe if asked to perform integration by parts. The skills required to inform a higher-level analysis are no different. If data scientists are not given the opportunity to exercise their knowledge through analysis, their competence slowly withers into skill decay. Likewise, if an organization wants to foster senior data scientists who have knowledge, experience, and organizational business acumen (senior data scientists who do not have to punch up to inform decisions), then that organization needs to ensure its junior data scientists have robust opportunities for analysis.

Therefore, outsourcing analysis to contractors is doubly damaging for an organization. Not only does it introduce the potential for the data scientist/analysis paradox, but it also deprives the organization’s junior data scientists of opportunities to further their knowledge, experience, and organizational business acumen.

Outsourcing analysis opportunities, removing data scientists from decision-making processes, and data scientist skill decay are deeply interconnected. Each of the three can affect one or both of the others in different ways. How these interconnected problems compound is likely best shown with an example.

Coast Guard COVID-19 Response

In mid-to-late March of 2020, non-essential businesses and services across the United States were shut down. Shortly thereafter, the Coast Guard stood up its COVID Crisis Action Team (CCAT). My supervisor volunteered to be part of the CCAT Data Analytics Cell (DAC). For me and the other data scientist on our team, this meant both sustained and one-off analysis opportunities in support of the Coast Guard’s COVID-19 response.

The Flawed Procurement: Coast Guard Personnel Accountability and Assessment System

On April 17, 2020, the Coast Guard released its initial requirements for COVID-19 case reporting and workforce status reporting.

These requirements were disseminated through ALCOAST 141/20, which required all active-duty and reserve personnel on active-duty orders to report their personal statuses in the Coast Guard Personnel Accountability and Assessment System (CGPAAS).[4]

ALCOAST 141/20 defined five personal statuses: Unaffected, Quarantined, Isolated, Hospitalized, and Released. For example, the verbatim definition of the Isolated personal status was:

Isolated - Member has been:

- diagnosed with COVID-19 or presumed COVID-19 positive awaiting test results and

- separated from others who have not been exposed.

The Coast Guard procured a live database: a database continually edited by its users, with changes reflected in real time. The Coast Guard needed a live database for its COVID-19 response, because it let senior leaders instantly know what percentage of the workforce was unaffected, isolated, etc. But the Coast Guard also needed capabilities beyond a live database. It needed a way to track personnel who contracted COVID-19 over time. How long were they isolated? When did they become ill? At what point did they recover and return to the workforce?
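A live database answers what is true right now, not what was true last week, so questions like these can only be answered by keeping dated copies of it and comparing them. The sketch below is a hypothetical illustration of that idea, not a reconstruction of any Coast Guard tool: it assumes each daily snapshot is a mapping from a made-up member ID to a personal status string, and it treats Isolated and Hospitalized together as "unavailable."

```python
from __future__ import annotations

from datetime import date

# Status names come from ALCOAST 141/20; treating Isolated and Hospitalized
# together as "unavailable to the workforce" is an assumption of this sketch.
UNAVAILABLE = {"Isolated", "Hospitalized"}


def unavailability_episodes(
    snapshots: dict[date, dict[str, str]],
) -> dict[str, list[tuple[date, date | None]]]:
    """Rebuild each member's unavailable periods from daily status snapshots.

    `snapshots` maps a snapshot date to {member_id: personal_status}.
    Each episode is (first date seen unavailable, first date seen available
    again); the end is None if the member was still unavailable in the
    last snapshot.
    """
    episodes: dict[str, list[tuple[date, date | None]]] = {}
    open_since: dict[str, date] = {}  # member_id -> start of current episode

    for day in sorted(snapshots):
        statuses = snapshots[day]
        # Open an episode for anyone who is newly unavailable today.
        for member, status in statuses.items():
            if status in UNAVAILABLE and member not in open_since:
                open_since[member] = day
        # Close the episode for anyone reporting an available status today.
        # Members missing from today's snapshot are left open (status unknown).
        for member in list(open_since):
            status = statuses.get(member)
            if status is not None and status not in UNAVAILABLE:
                episodes.setdefault(member, []).append((open_since.pop(member), day))

    # Episodes still open at the end have no recorded return date yet.
    for member, start in open_since.items():
        episodes.setdefault(member, []).append((start, None))
    return episodes


# Usage with made-up member IDs "A" and "B" (illustrative data only).
example_snapshots = {
    date(2020, 5, 1): {"A": "Unaffected", "B": "Isolated"},
    date(2020, 5, 2): {"A": "Isolated", "B": "Isolated"},
    date(2020, 5, 3): {"A": "Isolated", "B": "Released"},
}
for member, spans in unavailability_episodes(example_snapshots).items():
    print(member, spans)
```

Because each snapshot records only one status per member per day, an episode's dates are only as precise as the snapshot cadence; with daily snapshots, the end date minus the start date approximates how many days a member was unavailable.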

Initially, this capability gap was closed by personnel on the CCAT. Each day, members downloaded a snapshot of the live database and then updated a running list of COVID-19 cases with any new cases identified in the snapshot. The Coast Guard reported COVID-19 cases to the Department of Homeland Security (DHS), so DHS requirements drove what constituted a Coast Guard COVID-19 case. And DHS required counts of COVID-19 cases confirmed with a positive test result.

So, less than a month after the initial requirements for COVID-19 case reporting were published, on May 15, 2020, the Coast Guard updated the personal status definitions through ALCOAST 174/20.[5]

The isolated personal status definition was updated to:

Isolated - Member has been both:

- confirmed COVID-19 positive via a COVID-19 test or clinically diagnosed with COVID-19 by a healthcare professional and

- separated from others who have not been exposed.

When the tool the Coast Guard procured did not meet DHS’s requirements, the Coast Guard tasked an individual entity (the CCAT) to close the capability gap, and then increased the manual reporting responsibilities placed upon its entire workforce.

Having CCAT personnel compare a daily snapshot of approximately 60,000 personnel against a running list of COVID-19 cases was unsustainable. Tasked by our supervisor on the CCAT DAC, on May 26, 2020, my fellow data scientist and I implemented a Python script to automate as much of the tedious process as possible. However, the flaw in the Coast Guard’s procured solution remained. The most granular attributes available to narrow the 60,000-person snapshot down to COVID-19 cases were the “Isolated” and “Hospitalized” personal statuses. But these statuses do not guarantee that a COVID-19 case was confirmed via a positive test (many were clinically diagnosed). Therefore, the process still required a data scientist to read the notes for each potential new COVID-19 case to determine whether it was confirmed via a positive test.
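The daily comparison described above can be sketched in a few lines of Python. This is not the script we wrote; it is a minimal illustration that assumes each snapshot row is a dictionary with hypothetical `member_id`, `status`, and `notes` fields and that the running case list is a set of member IDs. It also shows why automation could only narrow the list: the final confirmed-or-not call stays with a person reading the notes.

```python
from __future__ import annotations

# Field names ("member_id", "status", "notes") are hypothetical; they are not
# taken from the actual CGPAAS export.
CANDIDATE_STATUSES = {"Isolated", "Hospitalized"}


def new_candidate_cases(
    snapshot: list[dict[str, str]], known_case_ids: set[str]
) -> list[dict[str, str]]:
    """Return snapshot records that *might* be new COVID-19 cases.

    Status alone cannot separate a case confirmed by a positive test from a
    clinically diagnosed one, so every record returned still needs a person
    to read its notes before it is added to the running case list.
    """
    return [
        record
        for record in snapshot
        if record["status"] in CANDIDATE_STATUSES
        and record["member_id"] not in known_case_ids
    ]


# Usage with illustrative records (not real data).
example_snapshot = [
    {"member_id": "A", "status": "Unaffected", "notes": ""},
    {"member_id": "B", "status": "Isolated", "notes": "already on running list"},
    {"member_id": "C", "status": "Hospitalized", "notes": "needs review"},
]
running_case_list = {"B"}
for record in new_candidate_cases(example_snapshot, running_case_list):
    print(record["member_id"], "->", record["notes"])  # flags only C for review
```

Filtering on status first keeps the manual step small, but it cannot remove it: with only status to go on, the script flags clinically diagnosed and test-confirmed cases alike.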

The Problem: Removal of Data Scientists from Decision-Making

Through surf and storm and howling gale, the Coast Guard moves forward. So did the CCAT. In July 2020, the CCAT went through a transition. Many of the original volunteers departed due to permanent change of station orders associated with the assignment year, and the remaining volunteer positions were replaced with more permanent and sustainable solutions. The exception was the DAC. The DAC remained a volunteer effort and was downsized to a single member who could help with the CCAT’s analytic needs and farm them out to willing data scientists. The process in which one of two data scientists ran the Coast Guard’s daily COVID-19 case numbers was never relieved or replaced.

I am confident the original members of the CCAT understood and accepted the flaws behind the COVID-19 case numbers. Originally, they identified the new cases themselves. Then, as the script was implemented, my fellow data scientist and I raised our concerns. But I cannot know whether these concerns were communicated in a pass-down or relief on the CCAT. Data scientists supporting the CCAT became names behind an e-mail delivery the CCAT required. And as DHS and senior leadership developed questions that required a deeper understanding of the data, those questions reached us second-hand through both e-mails and phone calls, often from several different CCAT members (each independently seeking the same answer).

Essentially, the Coast Guard removed data scientists from the decision-making process. It is not my intention to assert that data scientists should be empowered to make all decisions in the organization. However, the current status quo is regressive. A senior leader asks a question, and someone else communicates that question to a data scientist. The data scientist then informs whoever communicated the question, and that person relays the answer back to senior leaders. As a data scientist, you may never know whether the subtleties and complexities of your concerns were accurately repeated.

The Problem Compounded: Data Scientists Removed from Analysis

The other data scientist on my team and I were never afforded the opportunity to work on the CCAT’s analysis full-time. COVID-19 analysis became a collateral duty on top of the collateral duties already assigned to us. This in and of itself is not a large misstep. However, when asked to address a flawed procurement, data scientists should be given adequate time to address its shortcomings. With data scientists removed from the decision-making process, are senior leaders being told how the timeline attached to an analysis request affects the depth and accuracy data scientists can provide?

I am inclined to think senior leaders are not given these explanations, and the Coast Guard’s COVID-19 case numbers illustrate why. The Coast Guard evidently saw value in tracking COVID-19 cases that were clinically diagnosed without confirmation from a positive test, because it procured a tool that sorted clinically diagnosed cases into the same category (i.e., Isolated) as cases confirmed via a positive test. This makes sense, as a clinically diagnosed member is just as unavailable to the workforce as a member whose case is confirmed with a positive test. But again, the Coast Guard removed the data scientists closest to the data from the decision-making process. Then it compounded the problem by not allowing time to address the shortcomings of the procured tool.

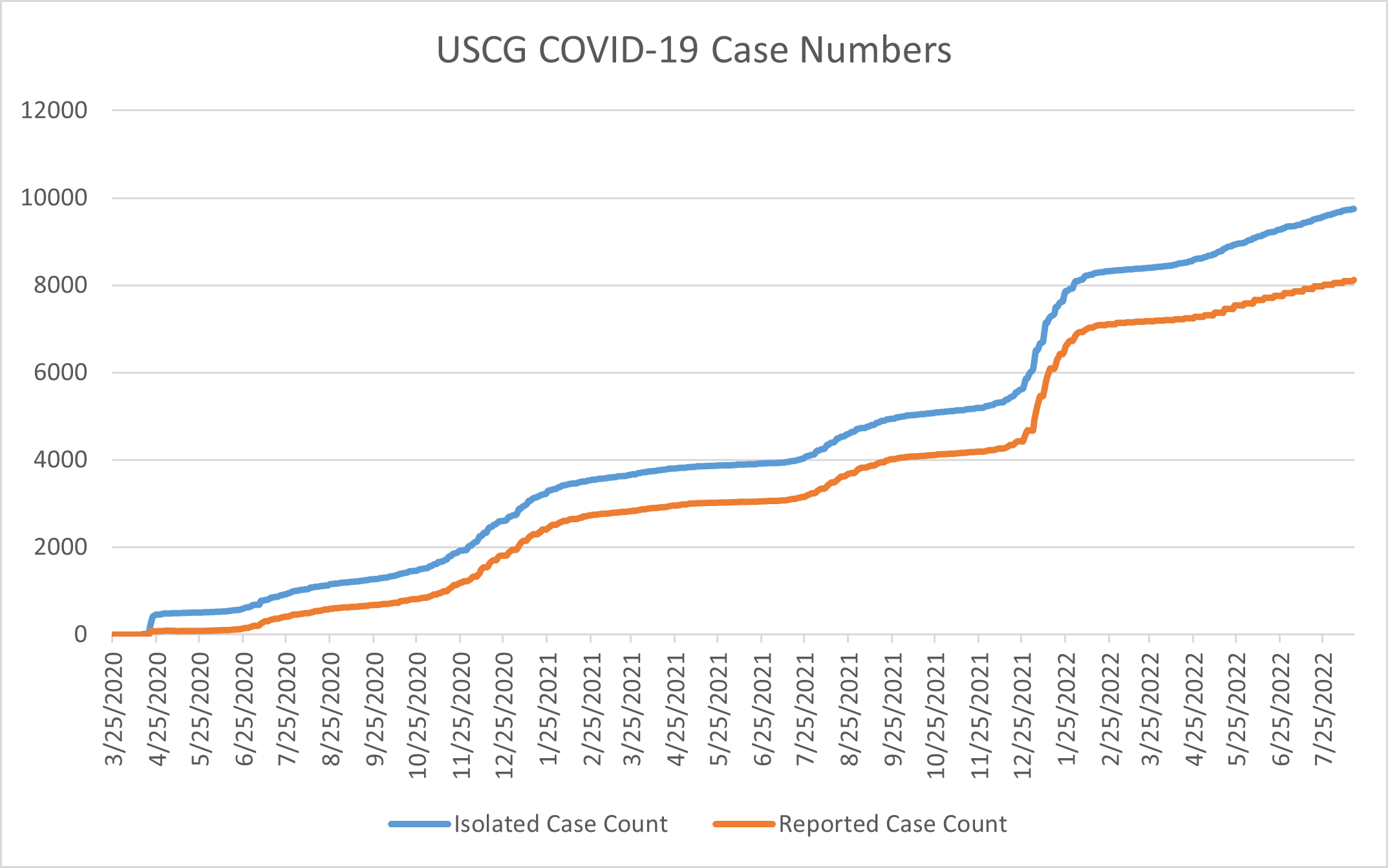

Over the course of two years, a small gap can grow into a large one. The COVID-19 cases reported to DHS and Coast Guard senior leaders remained the filtered-down case counts (i.e., cases confirmed via a positive test). Separately, and unreported, the count of COVID-19 cases that left a member unavailable to the workforce (i.e., personnel with an Isolated personal status) kept rising. At the time of writing, the gap between these two counts had widened to more than 1,600 cases. To put this value into perspective, at the time of writing the Coast Guard reported 8,128 cases, so the error amounts to nearly twenty percent (~20%) of the total number of cases reported.
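As a quick check on that percentage: the gap is stated only as “more than 1,600” cases, so dividing 1,600 by the 8,128 reported cases gives a lower bound on its share.

$$\frac{1{,}600}{8{,}128} \approx 0.197 \approx 20\%$$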

The Solution: Who is the Commandant’s Data Scientist?

Corrections to the three problems are reasonable, actionable, and interrelated. To avoid the data scientist/analysis paradox, ensure data scientists are present to both frame and receive contracted analysis and analytic procurements. This is interrelated with solving the second problem, which requires including data scientists (at both the senior and junior levels) in decision-making processes. And the solutions to the first two problems are interrelated with the solution to the third: giving data scientists analysis opportunities to combat skill decay.

To borrow from Occam’s Razor:

“The simplest solution is almost always the best [solution].”[6]

A simple solution would be to pair data scientists with admirals, like (or perhaps as) admirals’ aides. Just as admirals and their aides do today, data scientists and admirals could form a relationship of trust. Keeping analysis in-house and enabling internal data scientists to tackle the Coast Guard’s largest questions would begin to address all three problems. Paired with an admiral, a data scientist would be right there to advocate, “Analysis can inform this decision. Let’s do this analysis internally.” And perhaps in the future, when a procurement, strategy, or decision-making process does not make sense, the workforce will ask, “Who is the Commandant’s data scientist?”

These views are mine and should not be construed as the views of the U.S. Coast Guard.

[1] https://www.usni.org/magazines/proceedings/2021/august/path-data-driven-coast-guard

[4] https://www.uscg.mil/Coronavirus/Information/Article/2155366/alcoast-14120-covid-19-reporting-for-coast-guard-personnel-accountability-and-a/#:~:text=April%2017%2C%202020-,ALCOAST%20141%2F20%20%2D%20COVID%2D19%3A%20REPORTING%20FOR%20COAST,ACCOUNTABILITY%20AND%20ASSESSMENT%20SYSTEM%20(CGPAAS)&text=1.

[5] https://www.uscg.mil/Coronavirus/Information/Article/2191191/alcoast-17420-covid-19-reporting-for-coast-guard-personnel-accountability-and-a/

[6] https://www.interaction-design.org/literature/article/occam-s-razor-the-simplest-solution-is-always-the-best#:~:text=Occam's%20Razor%2C%20put%20simply%2C%20states,many%20great%20thinkers%20for%20centuries.